Dead or 'unalive'? How social platforms — and algorithms — are shaping the way we talk

Algorithm-based content moderation is influencing new language online

You might be familiar with the terms "seggs" and "unalive" if you've spent time on social media platforms like TikTok and Instagram.

They're a part of what's called "algospeak," a kind of coded language used on social media to protect content from getting removed or flagged by algorithm-based moderation tools.

For example, a commenter might write "seggs" or "unalive" to avoid using "sex" or "kill," words that could be censored by moderation. Others might use the eggplant emoji in place of the word "penis," or write "S.A." instead of "sexual assault." Similarly, the watermelon emoji has become a common way to show support for Palestinians and Gaza when discussing the Israel-Hamas war.

Social networks have different guidelines outlining what's acceptable to post, leaving users to find creative workarounds.

Adam Aleksic says people finding ways to get around publishing restrictions is nothing new.

"What is new is the medium and the fact that this algorithmic infrastructure is in place governing how we're speaking to each other," he told The Sunday Magazine host Piya Chattopadhyay.

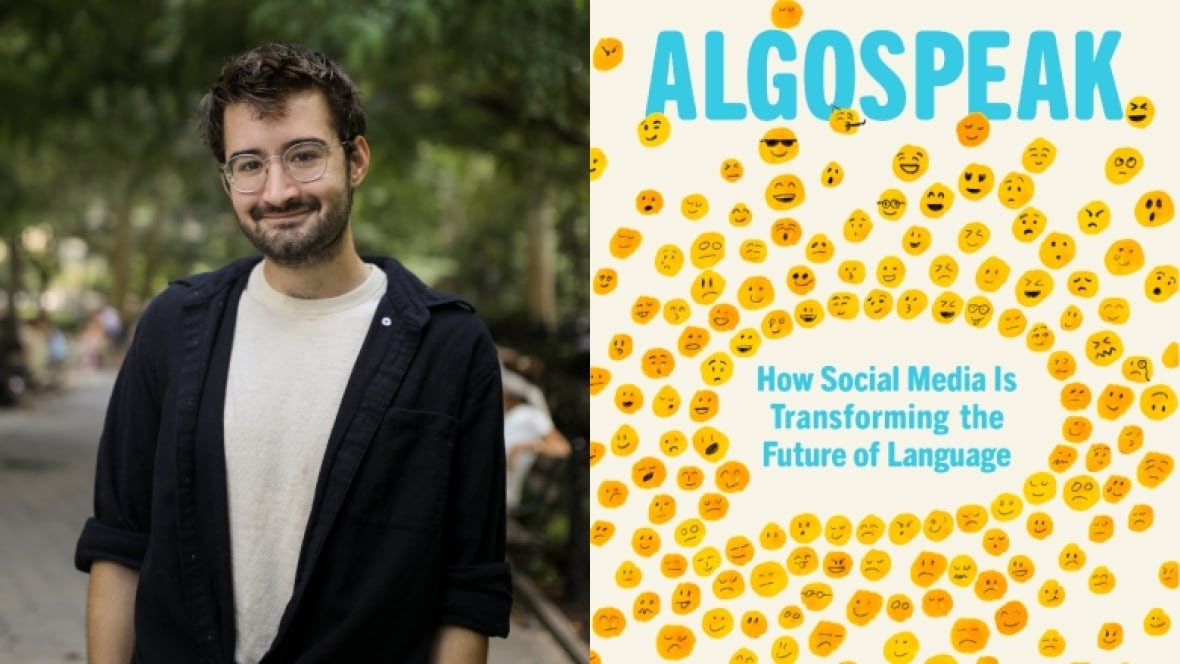

Aleksic is a linguist and influencer known to millions as @etymologynerd. His new book, Algospeak: How Social Media Is Transforming the Future of Language, explores how algorithms and online creators shift the way people communicate.

He compares the relationship between social media platforms and users to a game of Whac-A-Mole.

"The algorithm is producing more language change in this kind of never-ending cycle of platforms censoring something and people finding new ways to talk about it," he said.

In another example, Chinese internet users have taken to using the word for "harmony" as a way of describing government censorship online. It's meant as a reference to the Chinese government's goal of creating a harmonious society. When that and a similar-sounding replacement phrase, "river crab," were banned, people started saying "aquatic product," Aleksic said.

The author also says that some content creators self-regulate their speech based on what they believe the algorithm will punish.

Social media speech regulations

Aleksic says content moderation systems and algorithms are programmed using large language models, but how those inputs are assessed remains a mystery.

"The engineers themselves call it a black box because they don't understand what goes in," Aleksic said. "There's so many parameters that you can't actually say why it outputs something."

Jamie Cohen, a digital culture expert and professor at CUNY Queens College in New York, says content moderation rules on social media platforms are intended to reduce harm and classify what's acceptable speech.

Those safety guidelines are meant to protect users, he says, and removing them comes with consequences.

Cohen points to X, formerly known as Twitter, which introduced less restrictive speech policies when Elon Musk bought the company in 2022, reflecting his self-proclaimed "free-speech absolutist" position.

"Algospeak, or internet language in particular, it's about [reducing] harm," he said. "X is what a site looks like without algospeak, that's what it looks like when you remove the borders of forms of acceptable speech."

Earlier this month, X's AI-powered chatbot Grok posted offensive remarks, including antisemitic comments, in response to user prompts. Musk said that Grok, following an update to its algorithm, was "too compliant to user prompts," and that the issue was being addressed.

Culture and community

Finding new ways to communicate is a human activity that neither robots nor AI can do, Cohen says, adding that algospeak is a binary language where a word can have multiple meanings.

"Communication requires knowledge, nuance and a complex understanding of other people's use of the language."

Aleksic argues that language is a proxy for culture, which allows people to share common realities and foster empathy. He says algospeak influences the popularity of memes, trends and the formation of in-groups.

"Children are using language as a way of building identity, of differentiating themselves from adults and forging a shared commonality," he said.

Internet culture often overflows into the real world, Cohen says — and when users hear social media language used offline, it can help identify people with similar interests.

Tumblr, a social network popular in the late 2000s and 2010s, played a big role in language formation, especially among women, he says.

But Cohen pushes back against the idea that algospeak is a corruption of language. In fact, he celebrates it.

"I find algospeak beautiful because it's not only developing community and support groups, but people will know that they share language."

Interview with Adam Aleksic produced by Andrea Hoang